GNU Radio Performance 1/X

I recently started at the Secure Mobile Network Lab at TU Darmstadt, where I work on Software Defined Wireless Networks. For the time being, I'm looking into real-time data processing on normal PCs (aka the GNU Radio runtime). I’m really happy to have the chance to work in this area, since it’s something that has interested me for years. And now I have some time to take a close look at the topic.

In the last few weeks, I experimented quite a lot with the GNU Radio runtime and with new tools that helped me get some data out of it. Since I didn’t find a lot of information, I wanted to start a series of blog posts about the more technical bits. (A more verbose description of the setup is available in [1]. The code and scripts are also on GitHub.)

Setting up the Environment

The first question was how to set up an environment that allows me to conduct reproducible performance measurements. (While this was my main goal, I think most of the information in this post can also be useful for getting a more stable and deterministic throughput out of your flow graph.) Usually, GNU Radio runs on a normal operating system like Linux that’s doing a lot of stuff in the background. So things like file synchronization, search index updates, cron jobs, etc. might interfere with measurements. Ideally, I want to measure the performance of GNU Radio and nothing else.

CPU Sets

The first thing that I had to get rid of was interference from other processes running on the same system. Fortunately, Linux comes with CPU sets, which allow us to partition the CPUs and migrate all processes to a system set, leaving the other CPUs exclusively for GNU Radio. New processes will, by default, also end up in the system set.

On my laptop, I have 8 CPUs (4 cores with 2 hyper-threads each) and wanted to dedicate 2 cores with their hyper-threads to GNU Radio.

Initially, I assumed that CPUs 0-3 would correspond to the first two cores and 4-7 to the others.

As it turns out, this is not the case.
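The mapping between logical CPUs and physical cores can also be read directly from sysfs. The following is a minimal sketch, assuming a Linux system that exposes thread_siblings_list under /sys/devices/system/cpu; the function name list_core_siblings and its optional directory argument are made up for illustration.

```shell
# Print each group of logical CPUs that share one physical core.
# The optional first argument is a hypothetical override of the sysfs
# CPU directory, which makes it possible to try the function on a copy.
list_core_siblings() {
    local root="${1:-/sys/devices/system/cpu}"
    local f
    for f in "$root"/cpu[0-9]*/topology/thread_siblings_list
    do
        # Skip the unexpanded glob pattern if no such file exists.
        [ -r "$f" ] || continue
        cat "$f"
    done | sort -u | sort -n
}

list_core_siblings
```

Every output line lists the logical CPUs of one core, so hyper-thread siblings show up together on the same line.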

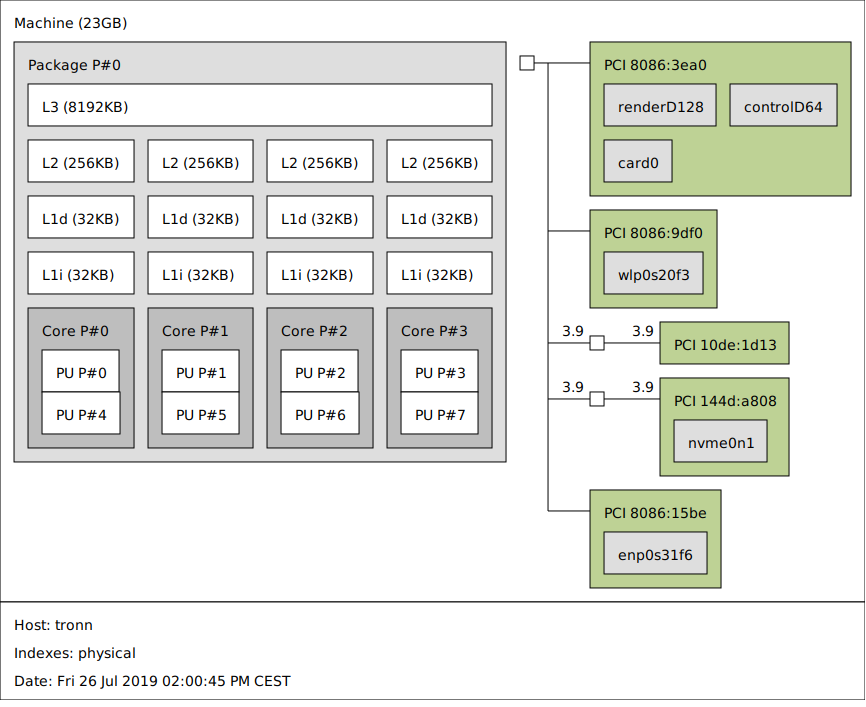

The lstopo command (on Ubuntu part of the hwloc package) gives a nice overview:

As we can see from the figure, it’s actually CPUs 2, 3, 6, and 7 that correspond to cores 2 and 3. To create a CPU set sdr with CPUs 2, 3, 6, and 7, I ran:

sudo cset shield --sysset=system --userset=sdr --cpu=2,6,3,7 --kthread=on

sudo chown -R root:basti /sys/fs/cgroup/cpuset

sudo chmod -R g+rwx /sys/fs/cgroup/cpuset

The latter two commands allow my user to start processes in the SDR CPU set.

The kthread option tries to also migrate kernel threads to the system CPU set.

This is not possible for all kernel threads, since some have CPU-specific tasks, but it’s the best we can do.

Starting a GNU Radio flow graph in the SDR CPU set can be done with:

cset shield --userset=sdr --exec -- ./run_flowgraph
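To check that a process really ended up on the intended CPUs, one option is to look at the Cpus_allowed_list entry that the kernel reports in the status file of the process. The sketch below assumes a Linux proc file system; allowed_cpus and its second argument are hypothetical names chosen for this example.

```shell
# Print the list of CPUs a process is allowed to run on.
# $1: PID (defaults to "self"), $2: proc directory (hypothetical
# override, only useful for trying the function on a saved status file).
allowed_cpus() {
    local pid="${1:-self}"
    local proc="${2:-/proc}"
    awk '/^Cpus_allowed_list:/ { print $2 }' "$proc/$pid/status"
}

# Show the CPUs that are available to the current process.
if [ -r /proc/self/status ]
then
    allowed_cpus self
fi
```

Run inside the SDR CPU set, this should only list the CPUs that were assigned to it, for example 2-3,6-7 in the setup described here.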

(As a side note: Linux has a kernel parameter isolcpus that allows isolating CPUs from the Linux scheduler. I also tried this approach, but as it turns out, it really means what it says, i.e., these cores are excluded from the scheduler. When I started GNU Radio with an affinity mask of the whole set, it always ended up on a single core. Without a scheduler, there are no task migrations to other cores, which renders this approach useless.)

IRQ Affinity

Another issue is interrupts.

If, for example, the GPU or network interface constantly interrupts the CPU where GNU Radio is running, we get lots of jitter and the throughput of the flow graph might vary significantly over time.

Fortunately, many interrupts are programmable, which means that they can be assigned to (a set of) CPUs.

This is called interrupt affinity and can be adjusted through the proc file system.

With watch -n 1 cat /proc/interrupts it’s possible to monitor the interrupt counts per CPU:

CPU0 CPU1 CPU2 CPU3 CPU4 CPU5 CPU6 CPU7

0: 12 0 0 0 0 0 0 0 IR-IO-APIC 2-edge timer

1: 625 0 0 0 11 0 0 0 IR-IO-APIC 1-edge i8042

8: 0 0 0 0 0 1 0 0 IR-IO-APIC 8-edge rtc0

9: 103378 43143 0 0 0 0 0 0 IR-IO-APIC 9-fasteoi acpi

12: 17737 0 0 533 67 0 0 0 IR-IO-APIC 12-edge i8042

14: 0 0 0 0 0 0 0 0 IR-IO-APIC 14-fasteoi INT34BB:00

16: 0 0 0 0 0 0 0 0 IR-IO-APIC 16-fasteoi i801_smbus, i2c_designware.0, idma64.0, mmc0

31: 0 0 0 0 0 0 0 100000 IR-IO-APIC 31-fasteoi tpm0

120: 0 0 0 0 0 0 0 0 DMAR-MSI 0-edge dmar0

[...]
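Since the full table is long, it can help to sum the counters per CPU and compare the totals before and after a measurement. The following is a rough sketch using awk; irq_totals is a hypothetical helper, and it assumes the usual layout of /proc/interrupts with one header line of CPU names followed by one row per interrupt.

```shell
# Sum the interrupt counters of each CPU column.
# $1: file to read (defaults to /proc/interrupts).
irq_totals() {
    local file="${1:-/proc/interrupts}"
    awk '
        # The header line names the CPU columns.
        NR == 1 { ncpu = NF; for (i = 1; i <= NF; i++) name[i] = $i; next }
        # Rows with a label plus one counter per CPU; shorter summary
        # rows such as ERR are skipped.
        NF > ncpu {
            for (i = 1; i <= ncpu; i++)
                if ($(i + 1) ~ /^[0-9]+$/) total[i] += $(i + 1)
        }
        END { for (i = 1; i <= ncpu; i++) printf "%s %.0f\n", name[i], total[i] }
    ' "$file"
}

if [ -r /proc/interrupts ]
then
    irq_totals
fi
```

If the totals of the CPUs reserved for signal processing keep growing quickly during a run, some interrupts are still being delivered there.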

In my case, I wanted to keep the CPUs of the SDR CPU set free from as many interrupts as possible.

So I tried to set a mask of 0x33 for all interrupts.

In binary, this corresponds to 0b00110011 and selects CPUs 0, 1, 4, and 5, i.e., the CPUs of the system CPU set.

for i in /proc/irq/*/smp_affinity

do

echo 33 | sudo tee "$i"

done
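The mask value can be derived from a list of CPU numbers by setting one bit per CPU. Here is a small sketch of that arithmetic; cpus_to_mask is a hypothetical helper name, and the sketch assumes a small number of CPUs, as on the 8-CPU laptop used here.

```shell
# Build a hexadecimal affinity mask from a list of CPU numbers.
cpus_to_mask() {
    local mask=0
    local cpu
    for cpu in "$@"
    do
        # Set the bit that corresponds to this CPU.
        mask=$(( mask | (1 << cpu) ))
    done
    printf '%x\n' "$mask"
}

cpus_to_mask 0 1 4 5   # system CPU set, prints 33
cpus_to_mask 2 3 6 7   # SDR CPU set, prints cc
```

The result is printed without a 0x prefix, which matches the form written to the smp_affinity files in the loop.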

Another potential pitfall is the irqbalance daemon, which might work against us by reassigning interrupts to the CPUs that we want to use for signal processing.

I therefore disabled the service during the measurements:

sudo systemctl stop irqbalance.service

CPU Governors

Finally, there is CPU frequency scaling, i.e., individual cores might adapt their frequencies based on the load of the system. While this shouldn’t be an issue if the system is fully loaded, it might make a difference for bursty loads. In my case, I mainly wanted to avoid initial transients and therefore set the CPU governor to performance, which should minimize frequency scaling.

#!/bin/bash

set -e

if [ "$#" -lt "1" ]

then

GOV=performance

else

GOV=$1

fi

CORES=$(getconf _NPROCESSORS_ONLN)

i=0

echo "New CPU governor: ${GOV}"

while [ "$i" -lt "$CORES" ]

do

sudo cpufreq-set -c $i -g $GOV

i=$(( $i+1 ))

done
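To confirm that the governor was actually applied, the current setting of each CPU can be read back from sysfs. This is a minimal sketch, assuming the cpufreq driver exposes scaling_governor under /sys/devices/system/cpu; show_governors and its optional directory argument are hypothetical names.

```shell
# Print the active frequency governor of every CPU.
# The optional first argument overrides the sysfs CPU directory.
show_governors() {
    local root="${1:-/sys/devices/system/cpu}"
    local f cpu
    for f in "$root"/cpu[0-9]*/cpufreq/scaling_governor
    do
        # Skip the unexpanded glob pattern if cpufreq is not available.
        [ -r "$f" ] || continue
        # Reduce the path to the CPU directory name, e.g. cpu0.
        cpu="${f%/cpufreq/scaling_governor}"
        cpu="${cpu##*/}"
        echo "$cpu: $(cat "$f")"
    done
}

show_governors
```

After running the script above with its default argument, every line should report performance.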

I hope this was somewhat helpful. In the next post, I will do some performance measurements.

-

[1] Bastian Bloessl, Marcus Müller and Matthias Hollick, “Benchmarking and Profiling the GNU Radio Scheduler,” Proceedings of 9th GNU Radio Conference (GRCon 2019), Huntsville, AL, September 2019.